What we learned in the field with the Etcd Operator.

Executive Overview ( tl;dr )

The Etcd Operator is a wonderful tool for automating the process of churning out stable, functional Etcd clusters. It can also handle backups and recovery with some manual work. At the end of the day, this is the tool of choice for using Etcd in production.

However, as with most things in the IT field, a little chaos can teach us a lot about what’s really going to happen in a production environment. Here’s the high level on what we learned:

- The Kubernetes operator is often advertised as “operations in a can.” That’s mostly true. In this case, however, the operator isn’t going to do some of the things we often expect when failures happen.

- A 3-node cluster is capable of handling most failure conditions in most clouds. However, elastic activity can have a dramatic impact on uptime.

- Both of these points lead to using node affinity and carefully planning the rollout of a production cluster.

Chaos Engineering

This is absolutely my favorite topic of cloud operations. CE is an invaluable tool for teaching us how things are going to blow up in production, or really any other environment. Fancy tools have been created to test a variety of different scenarios, but in my experience tweaking an AutoScalingGroup ( ASG ) can be just as powerful and a lot easier to pull off.

For the purpose of this article, we’re going to focus on what happens specifically to the Etcd pods and the service when we’re performing elastic operations. The scenario we’re covering is rolling nodes in a cluster from a large size to a smaller size.

Part of a good CE run ( at least in my opinion ) is planning around the human element. We lovely humans make any number of creative, wonderful mistakes, which is why we make the best Chaos Agents.

In our case we’re using AWS with EKS built out with Terraform ( >12 ).

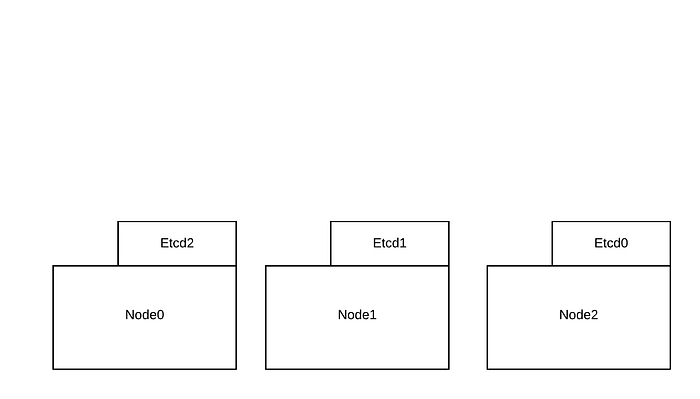

The Etcd operator doesn’t, by default, schedule based on node affinity. This means that the Etcd pods can be scheduled on any available node. In some cases, as we’ll see below, 2 Etcd pods can be scheduled on the same node.
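One way to keep members apart is a Kubernetes pod anti-affinity rule keyed on the node hostname. The sketch below builds such a rule as a plain Python dict and prints it as JSON. The `etcd_cluster` label key and the cluster name `example-etcd` are assumptions for illustration only. Check which labels your operator version actually puts on member pods, and where in the cluster spec it accepts an affinity block.

```python
import json


def anti_affinity_rule(label_key: str, label_value: str) -> dict:
    """Build an affinity block that forbids two matching pods on the same node."""
    return {
        "podAntiAffinity": {
            # "required" makes this a hard rule: a pod stays Pending rather
            # than landing next to another pod that matches the selector.
            "requiredDuringSchedulingIgnoredDuringExecution": [
                {
                    "labelSelector": {"matchLabels": {label_key: label_value}},
                    # One matching pod per distinct node hostname.
                    "topologyKey": "kubernetes.io/hostname",
                }
            ]
        }
    }


if __name__ == "__main__":
    # Label key and value are assumptions, not taken from the operator docs.
    print(json.dumps(anti_affinity_rule("etcd_cluster", "example-etcd"), indent=2))
```

Because the rule is a hard requirement, the cluster needs at least as many schedulable nodes as Etcd members, otherwise some members will never be scheduled.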

Node Affinity

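A quick note on why node affinity is so important when it comes to things that are clustered together. If every member of a cluster lands on its own node, losing a node costs us only one member. ( Strictly speaking, the Kubernetes feature that keeps pods apart is pod anti-affinity, but I’ll keep calling it node affinity here. )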

Here’s a view of the layout using node affinity:

And without affinity:

This will come into play later when we talk about removing nodes in a cluster.
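To make the difference concrete, here is a minimal sketch that compares two placements of a 3-member cluster and asks whether a majority of members survives the loss of any single node. The pod and node names are hypothetical.

```python
from collections import Counter


def members_lost_per_node(placement: dict[str, str]) -> dict[str, int]:
    """Count how many Etcd members disappear if each node is terminated."""
    return dict(Counter(placement.values()))


def survives_any_single_node_loss(placement: dict[str, str]) -> bool:
    """True if a strict majority of members remains after losing any one node."""
    total = len(placement)
    quorum = total // 2 + 1
    worst_case = max(members_lost_per_node(placement).values())
    return total - worst_case >= quorum


# Hypothetical placements: pod name -> node name.
spread = {"etcd-0": "node-a", "etcd-1": "node-b", "etcd-2": "node-c"}
stacked = {"etcd-0": "node-a", "etcd-1": "node-a", "etcd-2": "node-b"}

if __name__ == "__main__":
    print("spread survives:", survives_any_single_node_loss(spread))
    print("stacked survives:", survives_any_single_node_loss(stacked))
```

In the stacked layout, terminating `node-a` takes out 2 of 3 members at once, which is below the majority of 2 that a 3-member cluster needs.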

Rolling Nodes

During the rollout of our first few EKS clusters, we decided to overbuild with large compute ( m4.10xlarge ) instances. This was mainly because we wanted plenty of compute room for our software to grow. Normally I recommend starting small, then growing as needed. However, in the world of Machine Learning and perception pipelines, bigger was the way to go.

Eventually, we wanted to scale these down, as we learned that we weren’t using nearly as much compute as we had provisioned. We also wanted to be smart about our cloud spend. We decided to migrate off the m4.10xlarge instances to the smaller m4.2xlarge instances.

In this case, we wanted to make the modifications by hand on the ASG and leave the Terraform code alone for now. We created the new LaunchConfig, tied it to the ASG, then increased the ASG to double its current capacity, moving from Min/Max/Desired of 3/3/3 to 6/6/6.

Our thinking at the time was that everything in the cluster would handle the rescale operation gracefully. After all, we’re using Kube, right? Nodes can go down, and services are rescheduled accordingly, right? Well, that’s mostly true.

Our stack has a few interesting things running on it:

- 2 different PostgreSQL pods that each handle a different function.

- Keycloak

- Etcd ( using the operator )

- Prometheus ( again, using the operator )

- In-house code running on a few pods

Almost everything is deployed using Helm. Now let’s look at how we handled the elastic operation:

- Wait for the new, smaller instances to come up.

- Terminate the old instances.

Lots of people love trashing the AWS ECS service because it seems more complicated than Kube, but here’s the deal. If we were using ECS, this operation wouldn’t require the additional step of draining the nodes via kubectl because EC2 is tied to the target group, which is tied to the scheduler on the cluster.

Since we’re using Kube, we didn’t have a 100% smooth cutover on this operation. Which is fine. The solution here would have been to go a little slower and drain the nodes first.

The reason I chose to do things in this “brute force” kind of way is mainly because a real-world production outage isn’t going to drain nodes or be nice about how things go down. In this case, we wanted to learn what we could from something that was as close to a real-world disaster as we could manage, while we’re all in the office paying close attention.
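For reference, the slower path can be sketched as a small helper that prints the `kubectl` commands to run before terminating the old instances. The node names are hypothetical, and depending on the workloads and the kubectl version, `drain` may need additional flags beyond `--ignore-daemonsets`.

```python
import shlex


def drain_plan(old_nodes: list[str]) -> list[str]:
    """Return the kubectl commands to run, in order, before terminating nodes."""
    quoted = [shlex.quote(node) for node in old_nodes]
    # Cordon every old node first, so pods evicted from one old node
    # cannot be rescheduled onto another node that is about to go away.
    plan = [f"kubectl cordon {node}" for node in quoted]
    # Then drain one node at a time, evicting its pods onto the new nodes.
    plan += [f"kubectl drain {node} --ignore-daemonsets" for node in quoted]
    return plan


if __name__ == "__main__":
    # Hypothetical node names.
    for command in drain_plan(["old-node-1", "old-node-2", "old-node-3"]):
        print(command)
```

Only once each drain has finished and the evicted pods are running again would the old instance be terminated.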

What we learned is that the Etcd operator is more like a junior admin, at best. This operator doesn’t rebuild the cluster or bark at Slack about the outage. It simply dies and never comes back.

As it turns out, this is intended behavior as per the developers of the project. We now need to watch this particular service via Sensu or something else that can tell us when our Etcd service has gone away.
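A check like that doesn’t need to be complicated. Here is a minimal sketch that polls Etcd’s `/health` HTTP endpoint and treats any connection problem as unhealthy. It assumes the client port is reachable over plain HTTP without client certificates, which may not match your setup.

```python
import json
import urllib.request


def is_healthy(body: str) -> bool:
    """Interpret the JSON body returned by Etcd's /health endpoint."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return False
    if not isinstance(payload, dict):
        return False
    # Accept either the string "true" or a JSON boolean true.
    return str(payload.get("health")).lower() == "true"


def check(url: str, timeout: float = 3.0) -> bool:
    """Return False on any connection problem, so a vanished service reads as down."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return is_healthy(response.read().decode("utf-8"))
    except OSError:
        # Covers refused connections, timeouts, DNS failures and HTTP errors.
        return False
```

Something like `check("http://example-etcd-client:2379/health")`, where the hostname is a placeholder for your own client service, could run from a Sensu check or a cron job and alert whenever it returns `False`.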

For us, this was an absolutely silent failure. Additionally, we learned that the Etcd service that is deployed isn’t using node affinity. We didn’t delete all the old nodes right away. We started with 1 large node, and lost the cluster immediately. We had 2 pods running on the same node, which just so happened to be the 1 node I started with.

This was an invaluable lesson because it taught us that we can lose a critically important backend service by losing a single EC2 node, even though we’re using the coveted Kubernetes. As it turns out, Kube doesn’t actually solve all problems. We still have to be watchful and aware of our DevOps and SRE strategies.

Conclusion

My first instinct while troubleshooting this was to yell and scream ( internally of course ) at the Etcd operator. As a team, we learned that the operator is about as good as it could be. The failure here was in my understanding of this particular beast.

The outcome of this learning was to drastically change how we build out our new generation of EKS clusters. Instead of using 3 large nodes, we’re using 6–10 smaller nodes ( m4.large or thereabout ) and enabling node affinity for the Etcd clusters that the operator kicks out. We’re also setting the default size of any Etcd cluster to 6. This should allow us to lose up to 2 members without losing quorum, since a 6-member cluster needs 4 members for a majority.
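The quorum arithmetic behind cluster sizing is worth spelling out. Etcd needs a strict majority of members to stay available, so the number of members we can afford to lose is the cluster size minus that majority. A minimal sketch:

```python
def quorum(members: int) -> int:
    """Smallest strict majority of a cluster with the given number of members."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can fail while a majority is still available."""
    return members - quorum(members)


if __name__ == "__main__":
    for size in (3, 5, 6, 7):
        print(f"{size} members: quorum {quorum(size)}, tolerates {fault_tolerance(size)} failures")
```

Note that an even-sized cluster tolerates no more failures than the odd size just below it: 6 members tolerate 2 failures, the same as 5.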

So far we’ve found this to be a much more stable profile. In our testing of this new profile, we can easily do ASG changes ( now with Terraform! ) of any kind without actually losing the cluster.

At the end of the day, DevOps is really all about smashing stuff in a safe way to learn what’s going to break first, before we get to production and life does the smashing for us.